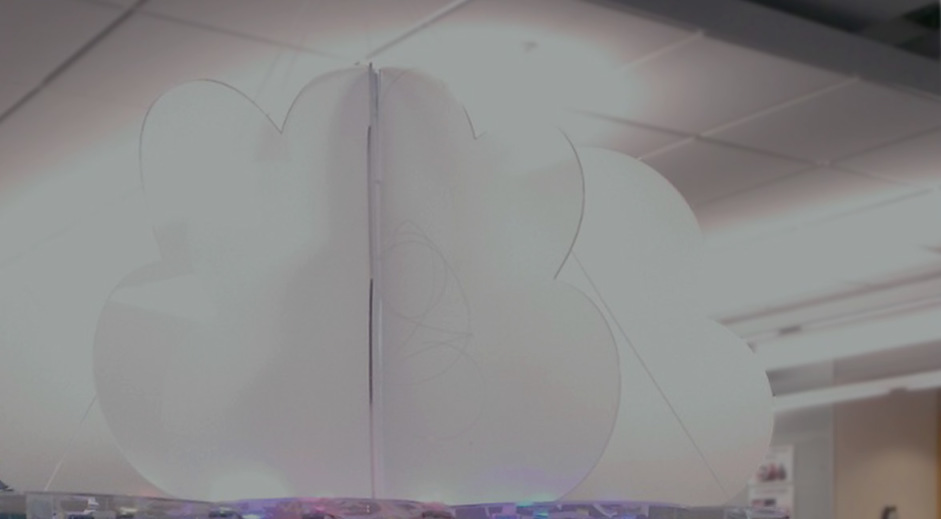

MoodCloud

Tangible UI / Sound Installation

MoodCloud is a sound and light installation created for Microsoft Research’s MakeFest 2014. It lets viewers follow the emotional content and activity level around any searched topic over time.

Beyond word clouds

Unlike word clouds, which provide only a snapshot in time and give no indication of the emotions around a topic, MoodCloud offers an ambient experience in which viewers can see and listen to the activity of topics on Twitter over time.

For a given topic, we search for the most popular tweets about it, and each tweet is assigned a mood as it goes live.

Custom designed sounds

The sounds for this installation were custom designed by me to reflect eight different moods: fatigue, fear, guilt, hostility, joviality, positivity, sadness, and serenity. We used sentiment analysis software to identify the main emotion of each tweet and played the corresponding sound when the message went live. The result was a symphony played live by Twitter messages.
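
The mood-to-sound step can be pictured with a minimal sketch. It is not the installation's actual code: the sound file names, the per-mood score dictionary, and the function names are all assumptions made for illustration, and the real sentiment analysis software may report emotions in a different form.

```python
# Hypothetical sketch: the file names and the score format are assumptions,
# not taken from the MoodCloud installation itself.

# One sound per mood, in the order the moods are listed above.
MOOD_SOUNDS = {
    "fatigue": "fatigue.wav",
    "fear": "fear.wav",
    "guilt": "guilt.wav",
    "hostility": "hostility.wav",
    "joviality": "joviality.wav",
    "positivity": "positivity.wav",
    "sadness": "sadness.wav",
    "serenity": "serenity.wav",
}


def dominant_mood(scores):
    """Return the mood with the highest score.

    `scores` maps mood names to numbers; missing moods count as 0.
    On a tie, the mood listed first in MOOD_SOUNDS wins.
    """
    return max(MOOD_SOUNDS, key=lambda mood: scores.get(mood, 0.0))


def sound_for_tweet(scores):
    """Pick the sound file to play for a tweet's mood scores."""
    return MOOD_SOUNDS[dominant_mood(scores)]
```

For example, a tweet scored as mostly serene with a little fear would trigger `sound_for_tweet({"fear": 0.2, "serenity": 0.7})`, which selects the serenity sound.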

Below are the sounds used in sequence:

Here is a video of me demonstrating it at Microsoft Research’s MakeFest 2014:

After its appearance at MakeFest, MoodCloud was selected for the Microsoft Employee Art Exhibit, where it was on display from June to September 2014.

Project core team:

Melissa Quintanilha – Concept, sound, physical and web design, project lead

Shelly Farnham – Twitter search and sentiment analysis integration

Jaime Teevan – Physical design, bulb frosting, wire splicing, and all-around helpfulness

Ivan Judson – Lights and other coding wonders